This blog post is an introduction to the world of GPU programming with CUDA. We will cover the fundamental concepts and tools necessary to get started with CUDA, including:

- The steps involved in a typical GPU program, such as allocating storage on the GPU, transferring data between the CPU and GPU, and launching kernels on the GPU to process the data.

- How to use nvcc, the NVIDIA CUDA compiler, to compile CUDA code and follow conventions like naming GPU data with a “d_” prefix (for “device”).

- Key functions like cudaMalloc and cudaMemcpy that are used to allocate GPU memory and transfer data between the host and device.

- The kernel launch operator (`<<<blocks, threads>>>`), which sets the number of blocks in the grid and the number of threads per block, and how to pass arguments to the kernel function.

- The importance of error checking in CUDA code.

To illustrate these concepts, we walk through a simple example that computes the squares of 64 numbers using CUDA. By the end of this post, you will have a basic foundation in GPU programming with CUDA and be ready to write your own programs and experience the performance benefits of using the GPU for parallel processing.

In my previous post I wrote an introduction to parallel programming with CUDA. In this post I explain a simple example CUDA program that computes the squares of 64 numbers. A typical GPU program consists of the following steps.

1- CPU allocates storage on GPU

2- CPU copies input data from CPU to GPU

3- CPU launches kernels on GPU to process the data

4- CPU copies result back to CPU from GPU
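
The four steps above can be sketched in a few lines of CUDA. This is only a minimal sketch under stated assumptions, not necessarily the code from the repository: the helper `square_on_gpu`, the `CUDA_CHECK` macro, and the `h_`/`d_` variable names are made up for illustration, and the launch uses a single block, so it assumes `n` is at most 1024 threads.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Hypothetical helper macro (an assumption, not from the post):
// print a message and abort if a CUDA runtime call fails.
#define CUDA_CHECK(call)                                              \
    do {                                                              \
        cudaError_t err_ = (call);                                    \
        if (err_ != cudaSuccess) {                                    \
            fprintf(stderr, "CUDA error: %s at %s:%d\n",              \
                    cudaGetErrorString(err_), __FILE__, __LINE__);    \
            exit(EXIT_FAILURE);                                       \
        }                                                             \
    } while (0)

// Kernel: each thread squares one element.
__global__ void square(float *d_out, const float *d_in) {
    int idx = threadIdx.x;
    float f = d_in[idx];
    d_out[idx] = f * f;
}

// Hypothetical host wrapper that walks through the four steps.
// Assumes n <= 1024 so that one block of n threads is enough.
void square_on_gpu(const float *h_in, float *h_out, int n) {
    size_t bytes = n * sizeof(float);
    float *d_in = NULL;
    float *d_out = NULL;

    // 1- CPU allocates storage on GPU
    CUDA_CHECK(cudaMalloc((void **)&d_in, bytes));
    CUDA_CHECK(cudaMalloc((void **)&d_out, bytes));

    // 2- CPU copies input data from CPU to GPU
    CUDA_CHECK(cudaMemcpy(d_in, h_in, bytes, cudaMemcpyHostToDevice));

    // 3- CPU launches the kernel: 1 block of n threads
    square<<<1, n>>>(d_out, d_in);
    CUDA_CHECK(cudaGetLastError());  // catches launch errors

    // 4- CPU copies result back to CPU from GPU
    CUDA_CHECK(cudaMemcpy(h_out, d_out, bytes, cudaMemcpyDeviceToHost));

    CUDA_CHECK(cudaFree(d_in));
    CUDA_CHECK(cudaFree(d_out));
}

int main(void) {
    const int ARRAY_SIZE = 64;
    float h_in[ARRAY_SIZE];
    float h_out[ARRAY_SIZE];

    // Input data on the host: 0, 1, 2, ..., 63
    for (int i = 0; i < ARRAY_SIZE; i++) {
        h_in[i] = (float)i;
    }

    square_on_gpu(h_in, h_out, ARRAY_SIZE);

    // Print the results, four per line
    for (int i = 0; i < ARRAY_SIZE; i++) {
        printf("%f%s", h_out[i], (i % 4 == 3) ? "\n" : "\t");
    }
    return 0;
}
```

The `h_` and `d_` prefixes mark which pointers live in host memory and which in device memory, which makes it harder to accidentally dereference a GPU pointer on the CPU. The final `cudaMemcpy` also waits for the kernel to finish, so no explicit synchronization is needed in this sketch.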

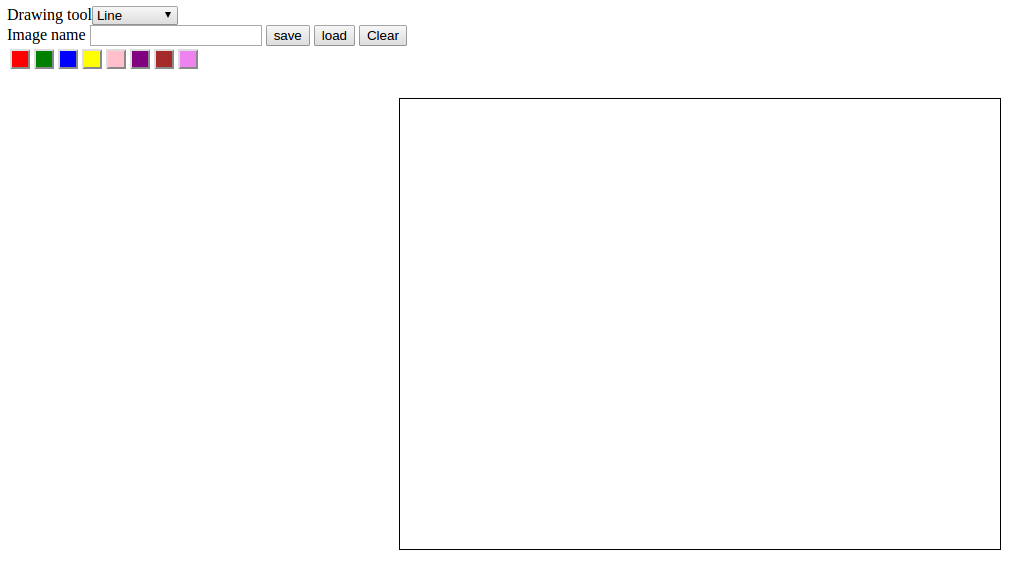

Here, instead of running the regular C compiler, we are running nvcc, the NVIDIA CUDA compiler, with a command along the lines of `nvcc -o square square.cu`. The output goes to an executable called square, and our input file is “square.cu”; .cu is the conventional extension for CUDA source files. Source code is available on GitHub.

We are going to walk through the CPU code first.